Mini GPT Explorer 🚀

Journey inside Large Language Models and discover how AI generates text, one token at a time


Understanding Large Language Models 🧠

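Large Language Models (LLMs) are AI systems trained on vast amounts of text to understand and generate human-like language. They are built on the transformer architecture, which processes a sequence of tokens and predicts a probability distribution over the next token. Text is generated by repeating that prediction one token at a time.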


Internal Mechanics ⚙️

Tokenization Demo 🔤

See how text is broken down into tokens that the model can process.
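Most GPT-style models use a subword tokenizer such as byte-pair encoding (BPE). BPE starts from single characters (bytes, in GPT-2) and repeatedly merges the most frequent adjacent pair of symbols into a new token. The following is a simplified sketch that assumes a tiny whitespace-split corpus and skips byte-level handling and end-of-word markers. `learn_bpe` and `tokenize` are hypothetical helper names, not a library API.

```python
from collections import Counter


def get_pair_counts(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn an ordered list of merges, starting from single characters."""
    words = dict(Counter(tuple(w) for w in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        words = merge_pair(words, best)
    return merges


def tokenize(word, merges):
    """Split a word into subword tokens by replaying the learned merges in order."""
    words = {tuple(word): 1}
    for pair in merges:
        words = merge_pair(words, pair)
    return list(next(iter(words)))


merges = learn_bpe("low lower lowest low", num_merges=2)
print(merges)
print(tokenize("lowest", merges))
```

Because "low" is frequent in this toy corpus, it becomes a single token, while the rarer ending of "lowest" stays split into smaller pieces.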

Word Embeddings 🧮

Explore how words are represented as vectors in high-dimensional space.

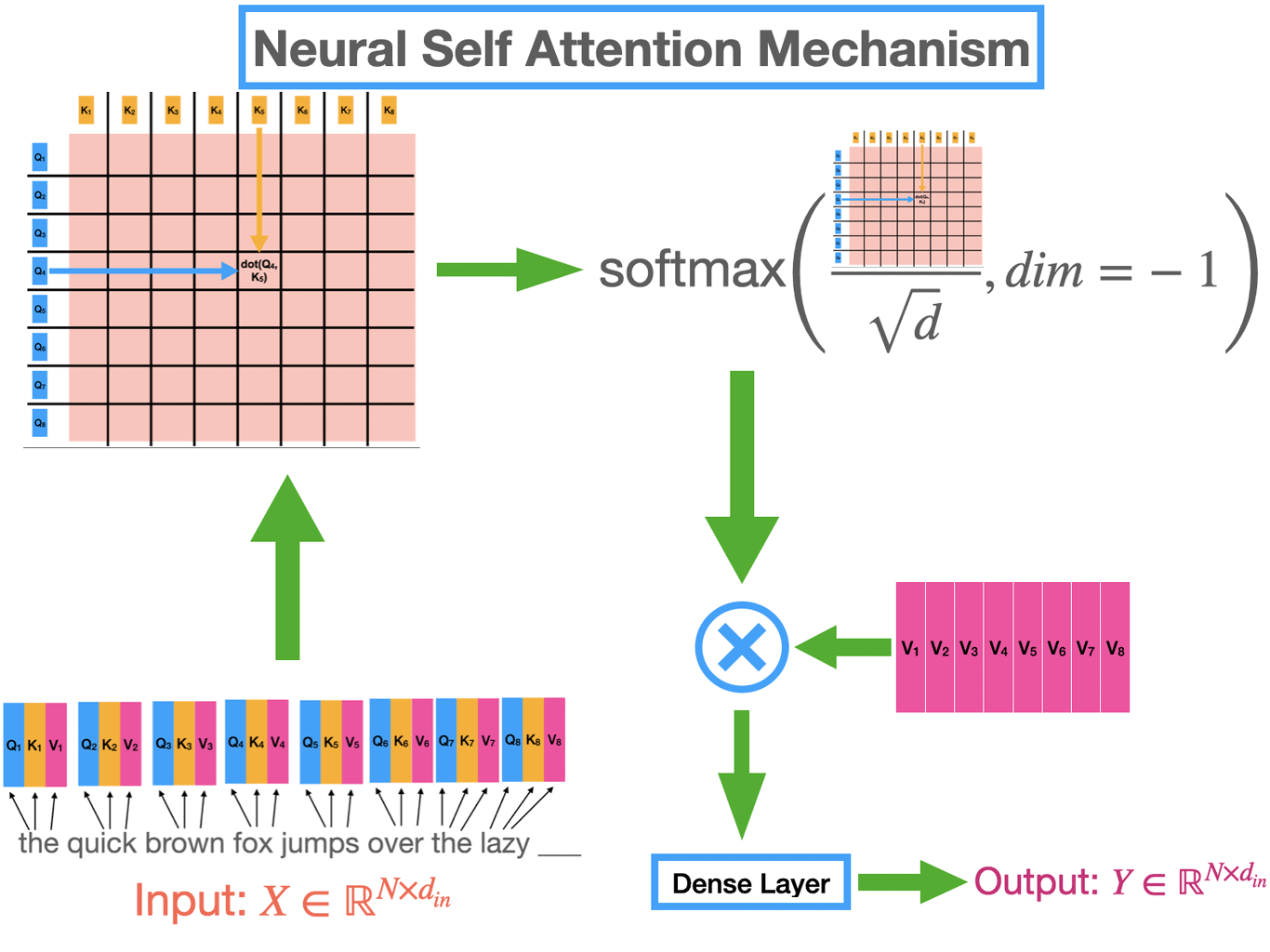
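A common way to compare word vectors is cosine similarity: words used in similar contexts end up pointing in similar directions. The sketch below uses hand-picked 4-dimensional vectors purely for illustration; the `EMBEDDINGS` values are hypothetical, whereas a real model learns its vectors during training and uses hundreds or thousands of dimensions.

```python
import math

# Hypothetical, hand-picked embeddings (not learned by any real model).
EMBEDDINGS = {
    "king":  [0.8, 0.6, 0.1, 0.2],
    "queen": [0.7, 0.7, 0.1, 0.3],
    "apple": [0.1, 0.0, 0.9, 0.7],
}


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word):
    """Return the other vocabulary word whose vector is closest in angle."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(round(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]), 3))
print(round(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]), 3))
print(most_similar("king"))
```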

Attention Mechanism 👁️

Understand how the model focuses on relevant parts of the input.
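At its core, attention scores each earlier token against the current one, turns the scores into weights with a softmax, and returns a weighted average of the value vectors: softmax(QKᵀ / √d_k)·V. Here is a minimal single-head sketch in plain Python, assuming a GPT-style causal mask (each position only sees itself and earlier positions). Multi-head attention runs several of these in parallel with learned projections, which are omitted here, and the toy vectors are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention for one head; returns (outputs, weights)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: position i may only attend to positions 0..i.
        visible = range(i + 1) if causal else range(len(keys))
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d_k) for j in visible]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for w, j in zip(weights, visible))
               for k in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-d token vectors; for simplicity Q = K = V = x here.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
for w in weights:
    print([round(v, 3) for v in w])
```

The first token can only attend to itself, so its weight is always 1.0; later tokens spread their attention over everything before them.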

Transformer Architecture 🏗️

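The main stages of the transformer, in the order a token's representation flows through them:

Token Embedding: converts tokens to vectors

Multi-Head Attention: focuses on relevant context

Feed Forward: processes the attended information

Output Layer: generates next-token probabilities

In a full model, the attention and feed-forward stages are stacked and repeated across many layers.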
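To make the four stages concrete, here is a toy forward pass in plain Python that follows them in order. It is a sketch under heavy assumptions: the 4-token vocabulary, width `D = 4`, and all weights are hypothetical and randomly initialised (untrained), there is one layer with a single attention head, ReLU stands in for the GELU used by GPT-2, and positional encodings and layer normalisation are left out. Residual connections are included because they are what let each stage add to, rather than replace, the token's representation.

```python
import math
import random

random.seed(0)  # fixed seed so the untrained weights are reproducible
VOCAB = ["The", "future", "is", "AI"]  # hypothetical 4-token vocabulary
D = 4  # hypothetical model width


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def vecmat(v, w):
    """Row vector v (length n) times matrix w (n x m) -> vector of length m."""
    return [sum(v[i] * w[i][j] for i in range(len(v))) for j in range(len(w[0]))]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Untrained random weights: they show the data flow, not real predictions.
EMBED = rand_matrix(len(VOCAB), D)                       # token embedding table
W_Q, W_K, W_V = (rand_matrix(D, D) for _ in range(3))    # attention projections
W_1, W_2 = rand_matrix(D, 4 * D), rand_matrix(4 * D, D)  # feed-forward layers
W_OUT = rand_matrix(D, len(VOCAB))                       # output projection


def forward(token_ids):
    # 1. Token embedding: one vector per input token.
    x = [EMBED[t] for t in token_ids]

    # 2. Single-head causal self-attention, added back via a residual connection.
    q = [vecmat(h, W_Q) for h in x]
    k = [vecmat(h, W_K) for h in x]
    v = [vecmat(h, W_V) for h in x]
    attended = []
    for i in range(len(x)):
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(D) for j in range(i + 1)]
        w = softmax(scores)
        attended.append([sum(w[j] * v[j][d] for j in range(i + 1)) for d in range(D)])
    x = [[a + b for a, b in zip(h, att)] for h, att in zip(x, attended)]

    # 3. Feed-forward network (ReLU here for simplicity), plus residual.
    ff = [vecmat([max(0.0, z) for z in vecmat(h, W_1)], W_2) for h in x]
    x = [[a + b for a, b in zip(h, f)] for h, f in zip(x, ff)]

    # 4. Output layer: logits for the last position -> next-token probabilities.
    return softmax(vecmat(x[-1], W_OUT))


probs = forward([0, 1, 2])  # token ids for "The future is"
for token, p in zip(VOCAB, probs):
    print(f"{token}: {p:.3f}")
```

With random weights the probabilities are meaningless; training is what adjusts these matrices so that plausible next tokens receive high probability.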

Content Generation Process ⚡

Decoding Strategies
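Once the model has produced a next-token distribution, a decoding strategy decides which token to emit. Three common ones are greedy decoding (always take the most likely token), top-k sampling (sample among the k most likely tokens), and top-p or nucleus sampling (sample from the smallest set of top tokens whose probabilities add up to at least p). The following sketch assumes the distribution is already available as a dictionary; `NEXT_TOKEN_PROBS` and the function names are hypothetical.

```python
import random

# Hypothetical next-token distribution for the prompt "The sun is".
NEXT_TOKEN_PROBS = {"bright": 0.5, "shining": 0.3, "dancing": 0.15, "purple": 0.05}


def greedy(probs):
    """Always pick the single most likely token."""
    return max(probs, key=probs.get)


def top_k_sample(probs, k, rng):
    """Sample among the k most likely tokens (choices renormalises the weights)."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    tokens = [t for t, _ in top]
    weights = [p for _, p in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


def top_p_sample(probs, p, rng):
    """Nucleus sampling: keep the smallest set of top tokens whose mass reaches p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, mass = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        mass += prob
        if mass >= p:
            break
    tokens = [t for t, _ in kept]
    weights = [w for _, w in kept]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(42)
print(greedy(NEXT_TOKEN_PROBS))
print(top_k_sample(NEXT_TOKEN_PROBS, k=2, rng=rng))
print(top_p_sample(NEXT_TOKEN_PROBS, p=0.9, rng=rng))
```

Greedy decoding is deterministic but can sound repetitive; top-k and top-p trade some predictability for variety by cutting off the unlikely tail before sampling.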

Temperature Control 🌡️

Low Temperature (0.2)

The sun is bright and beautiful today.

High Temperature (1.8)

The sun dances wildly through cosmic dreams of tomorrow.
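Temperature works by dividing the model's raw scores (logits) by T before the softmax. A low temperature such as 0.2 sharpens the distribution so the top token dominates, which gives predictable text like the first example; a high temperature such as 1.8 flattens it so unlikely tokens get picked more often, which gives the more surprising second example. In this minimal sketch the logits are hypothetical values for four candidate tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after scaling them by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for four candidate tokens
for t in (0.2, 1.0, 1.8):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As T approaches 0 the result approaches greedy decoding, which is why many implementations treat a temperature of 0 as "always pick the top token" rather than dividing by zero.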

Step-by-Step Generation
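Generation is a loop: score the candidate next tokens given everything so far, pick one, append it to the context, and repeat until an end-of-sequence token appears or a length limit is reached. In the sketch below, a hypothetical lookup table (`NEXT_TOKEN_TABLE`) stands in for the trained model so the loop itself stays visible; a real LLM conditions on the entire context rather than only the previous token.

```python
import random

# Hypothetical next-token table standing in for a trained model.
NEXT_TOKEN_TABLE = {
    "The": {"future": 0.7, "sun": 0.3},
    "future": {"is": 1.0},
    "sun": {"is": 1.0},
    "is": {"AI": 0.6, "bright": 0.4},
    "AI": {"<eos>": 1.0},
    "bright": {"<eos>": 1.0},
}


def next_token_probs(context):
    """Stand-in for a model forward pass: probabilities for the next token."""
    return NEXT_TOKEN_TABLE[context[-1]]


def generate(prompt, max_new_tokens=10, greedy=False, rng=None):
    if rng is None:
        rng = random.Random()
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)          # 1. score candidate next tokens
        if greedy:
            choice = max(probs, key=probs.get)    # 2a. take the most likely token
        else:                                     # 2b. or sample by probability
            choice = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
        if choice == "<eos>":                     # 3. stop at end-of-sequence
            break
        tokens.append(choice)                     # 4. append and repeat
    return tokens


print(generate(["The"], greedy=True))
print(generate(["The"], rng=random.Random(7)))
```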

